High-Speed, High-Precision Visual Localization via Integrated Local Feature Aggregation

- Post by: admin

- 2025-03-27

Visual localization, the task of estimating a camera's 6-DoF pose, is central to many computer vision applications such as Structure-from-Motion (SfM) and SLAM. Traditional approaches use separate networks: one extracts global features for image retrieval, and another extracts local features for precise pose estimation. This separation increases computational cost and memory consumption, which makes practical deployment difficult in large-scale environments and under complex imaging conditions.
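To make the role of the global feature concrete, the retrieval step can be sketched as a nearest-neighbor search over one vector per database image. This is a minimal illustration, not code from the paper: the names `cosine_similarity`, `retrieve_top_k`, and the `db_*` image IDs are hypothetical, and the three-dimensional vectors are toy values.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-12)

def retrieve_top_k(query, database, k=2):
    """Return (image_id, similarity) pairs for the k most similar entries."""
    scored = [(image_id, cosine_similarity(query, feat))
              for image_id, feat in database.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]

# Toy database: one global feature per reference image (hypothetical values).
database = {
    "db_000": [1.0, 0.0, 0.0],
    "db_001": [0.7, 0.7, 0.0],
    "db_002": [0.0, 0.0, 1.0],
}
query = [0.9, 0.1, 0.0]
top = retrieve_top_k(query, database, k=2)
print([image_id for image_id, _ in top])
```

The retrieved images are then the candidates against which local features are matched for pose estimation.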

Proposed Method

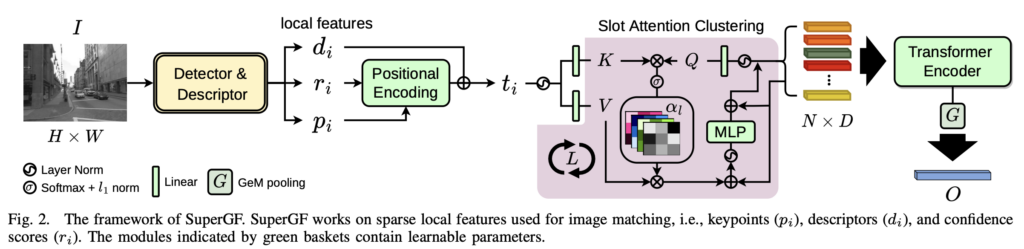

This paper proposes a novel approach called “SuperGF” that efficiently generates global features from local features. First, local features (keypoints, descriptors, and confidence scores) are extracted from the input image using a method such as SuperPoint. Each local feature is turned into a token by adding information such as its image position and confidence. Next, a slot attention mechanism is applied to these tokens to extract representative cluster centers, which summarize the characteristics of different local regions while reducing the computational load. Finally, a Transformer encoder promotes interactions among the tokens, and GeM pooling aggregates them into a single global feature vector. This global feature achieves accuracy comparable to or exceeding that of conventional global feature extraction networks, while significantly improving efficiency by reusing the already computed local features.
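The aggregation steps can be sketched in plain Python as follows. This is a heavily simplified illustration under stated assumptions, not the authors' implementation: the slot attention here has no learned projections, GRU, or MLP, the Transformer encoder stage is omitted entirely, and all function names, the token layout, and the toy dimensions are hypothetical.

```python
import math
import random

def make_tokens(keypoints, descriptors, scores, width, height):
    """Append normalized (x, y) position and confidence to each descriptor."""
    return [list(desc) + [x / width, y / height, s]
            for (x, y), desc, s in zip(keypoints, descriptors, scores)]

def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def slot_attention(tokens, num_slots, iters=3, seed=0):
    """Simplified slot attention (assumption: no learned weights).

    Slots compete for tokens via a softmax over slots, then each slot is
    updated to the attention-weighted mean of the tokens.
    """
    rng = random.Random(seed)
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    slots = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_slots)]
    for _ in range(iters):
        # attn[i][k]: how strongly token i is claimed by slot k.
        attn = [softmax([scale * sum(t * s for t, s in zip(tok, slot))
                         for slot in slots]) for tok in tokens]
        new_slots = []
        for k in range(num_slots):
            weights = [row[k] for row in attn]
            norm = sum(weights) + 1e-8
            new_slots.append([sum(w * tok[d] for w, tok in zip(weights, tokens)) / norm
                              for d in range(dim)])
        slots = new_slots
    return slots

def gem_pool(vectors, p=3.0, eps=1e-6):
    """Generalized-mean pooling per dimension; values are clamped to eps."""
    n = len(vectors)
    return [(sum(max(v[d], eps) ** p for v in vectors) / n) ** (1.0 / p)
            for d in range(len(vectors[0]))]

def l2_normalize(vec):
    norm = math.sqrt(sum(x * x for x in vec)) + 1e-12
    return [x / norm for x in vec]

# Toy input: 50 random "local features" with 8-D descriptors (hypothetical).
rng = random.Random(1)
keypoints = [(rng.uniform(0, 640), rng.uniform(0, 480)) for _ in range(50)]
descriptors = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(50)]
scores = [rng.random() for _ in range(50)]

tokens = make_tokens(keypoints, descriptors, scores, 640, 480)
slots = slot_attention(tokens, num_slots=4)
global_feature = l2_normalize(gem_pool(slots))
```

With `p = 1` GeM pooling reduces to average pooling, and as `p` grows it approaches max pooling, which is why it is a common final aggregation step for retrieval descriptors.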

Experimental Results

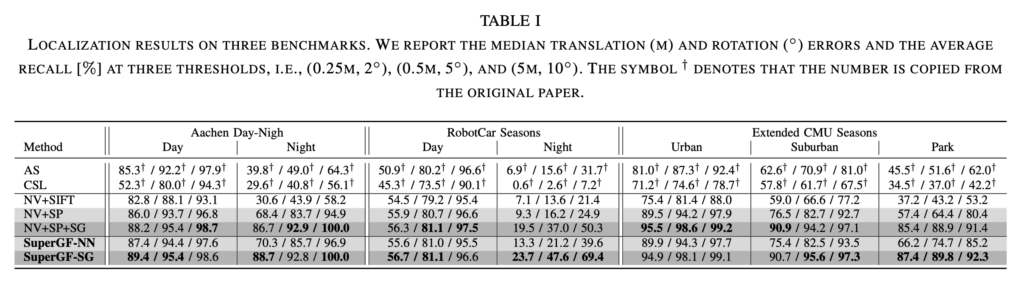

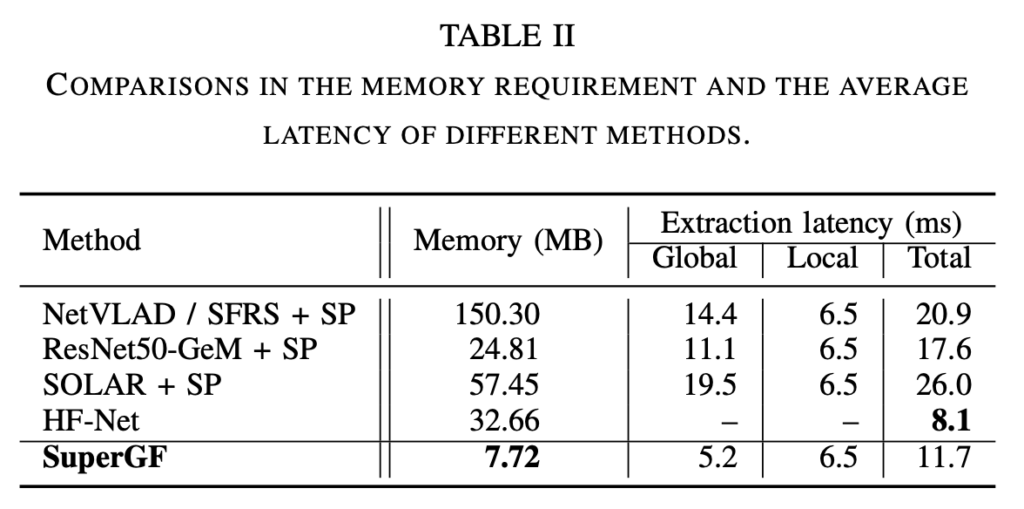

The proposed method is evaluated on several benchmark datasets, including Aachen Day-Night, Extended CMU, and RobotCar Seasons, where it is compared with existing methods for both image retrieval and camera pose estimation. Experimental results demonstrate that SuperGF attains performance equal to or better than conventional approaches (such as those using NetVLAD or separate extraction of local and global features) while greatly reducing computation time and memory usage. In particular, high repeatability is maintained even under challenging conditions, such as nighttime scenes, variable weather, and seasonal changes, highlighting its adaptability to visual place recognition (VPR) tasks.

Conclusion

This paper introduces “SuperGF,” a new method that reuses the output of local feature extraction to efficiently generate robust global features. This substantially reduces the computational burden of visual localization while preserving high-precision camera pose estimation. During training, the method combines an AP loss, soft similarity scores based on field-of-view (FoV) overlap, and an attention decorrelation loss, so that local features complement one another and are aggregated into a reliable global representation. Experimental findings indicate that SuperGF offers significant advantages in inference speed and memory efficiency while remaining competitive in accuracy, making it promising for applications in robotics, autonomous driving, augmented reality, and related fields.
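For readers unfamiliar with the quantity an AP loss targets, the snippet below computes plain average precision for one ranked retrieval list. It is only the standard, non-differentiable metric with binary relevance labels; the training loss described above is a differentiable variant that uses soft FoV-overlap scores, and its exact form is not reproduced here. The function name `average_precision` is illustrative.

```python
def average_precision(ranked_relevance):
    """AP of a ranked list; ranked_relevance[i] is truthy if item i is relevant."""
    hits = 0
    precision_sum = 0.0
    for rank, relevant in enumerate(ranked_relevance, start=1):
        if relevant:
            hits += 1
            # Precision measured at the rank of each relevant item.
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0

# Relevant images ranked first give a perfect score.
ap_good = average_precision([1, 1, 0, 0])
# A relevant image pushed down to rank 3 lowers the score.
ap_mixed = average_precision([1, 0, 1, 0])
print(ap_good, ap_mixed)
```

A loss built on this quantity rewards global features that rank overlapping views above non-overlapping ones, which is the behavior retrieval needs.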

Publication

Song, Wenzheng, et al. “Globalizing Local Features: Image Retrieval Using Shared Local Features with Pose Estimation for Faster Visual Localization.” 2024 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2024.

@inproceedings{song2024globalizing,
  title={Globalizing Local Features: Image Retrieval Using Shared Local Features with Pose Estimation for Faster Visual Localization},
  author={Song, Wenzheng and Yan, Ran and Lei, Boshu and Okatani, Takayuki},
  booktitle={2024 IEEE International Conference on Robotics and Automation (ICRA)},
  pages={6290--6297},
  year={2024},
  organization={IEEE}
}