Symmetry-Aware Architecture for Enhanced Generalization in Embodied Visual Navigation

- Post by: admin

- 2025-03-27

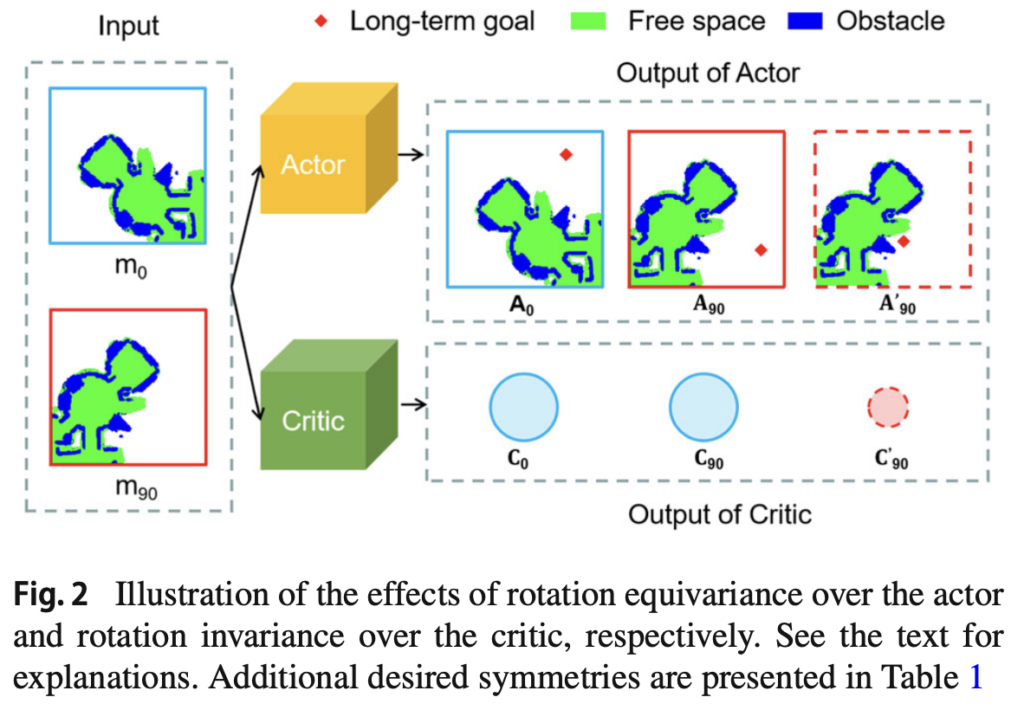

Embodied visual navigation, which is crucial in fields such as autonomous robotics and augmented reality, enables a robot to search for target objects in an unknown environment while localizing itself. However, existing deep reinforcement learning (RL) approaches often degrade in out-of-distribution environments because of the statistical shift between training and test data, which limits their generalization. This study aims to build a more robust navigation system by incorporating task-specific inductive biases, namely equivariance and invariance with respect to input transformations, into the network. Specifically, taking Active Neural SLAM (ANS) as the base framework, the actor and critic networks of its global policy should have the following ideal symmetries: the actor should be equivariant to translations and rotations of the input map (if the map is shifted or rotated, the predicted goal should shift or rotate with it), while the critic should be invariant to rotation (rotating the map should not change the estimated value). Conventional methods, however, do not sufficiently enforce these properties.
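The two required properties can be written down compactly. The notation below is ours, not the paper's: m is the input map, π the actor (assumed here, for illustration, to output a score for every map cell as a candidate long-term goal), V the critic, and T_g the action of a transformation g on a map. Rotations are restricted to multiples of 90°, the group that p4 convolutions handle.

```latex
% Action of a translation t, or a rotation r by a multiple of 90 degrees, on a map m
(T_t m)(x) = m(x - t), \qquad (T_r m)(x) = m(r^{-1} x)

% Actor: equivariance (the goal score map moves with the input map)
\pi(T_g m) = T_g \, \pi(m) \quad \text{for every translation or rotation } g

% Critic: rotation invariance (the estimated value does not change)
V(T_r m) = V(m) \quad \text{for } r \in \{0^\circ, 90^\circ, 180^\circ, 270^\circ\}
```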

Proposed Method

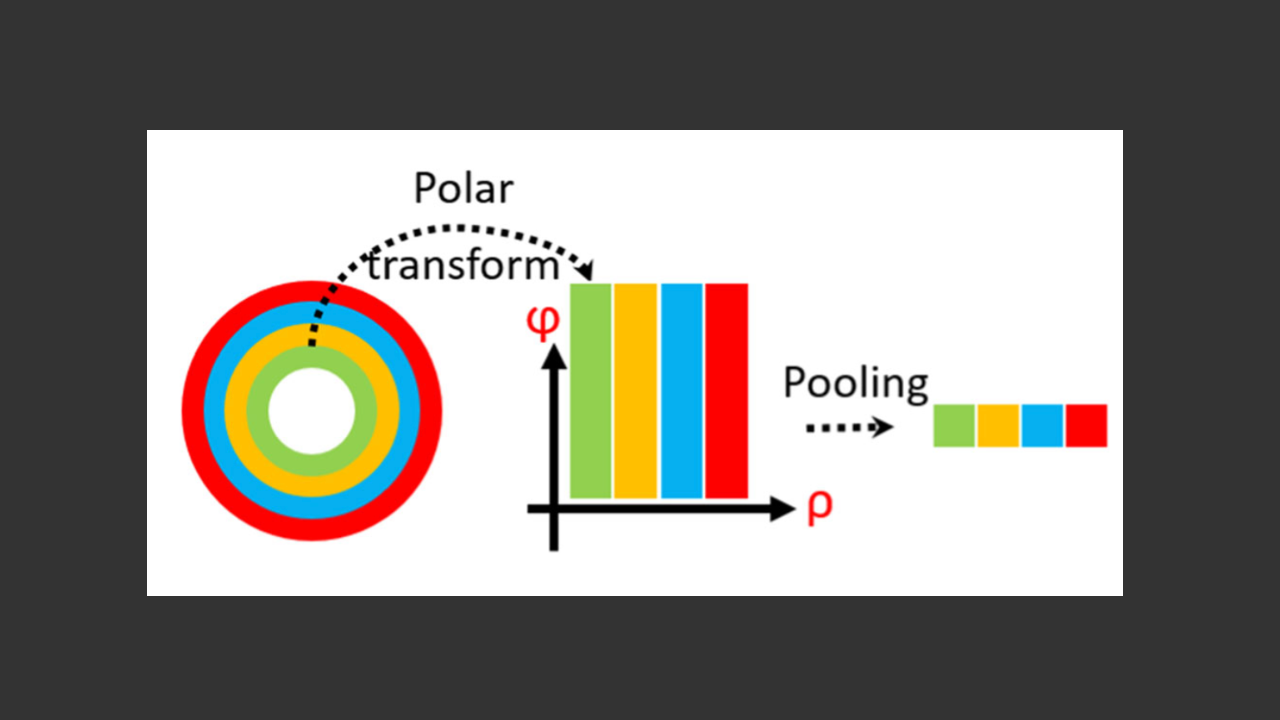

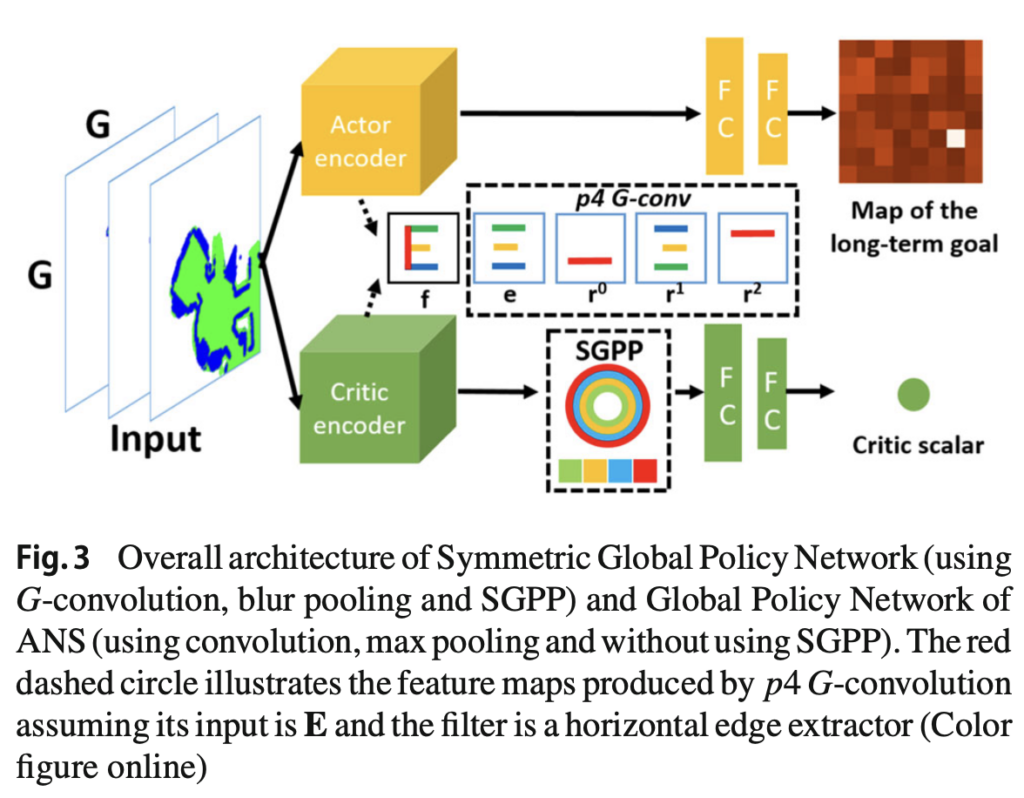

In this paper, we propose a novel neural architecture, the “Symmetry-aware Network,” that redesigns the global policy module of ANS so that the actor and critic networks acquire the desired symmetries by construction. For the actor, which outputs a long-term goal from an input map, we replace standard convolutional layers with p4 group convolutions (G-convolutions), which are equivariant to translations and to rotations by multiples of 90°, and apply blur pooling (low-pass filtering before subsampling) during downsampling so that the strided layers do not break translation equivariance. The critic network, in contrast, outputs a scalar estimate of the expected cumulative future reward from the input map and must therefore be invariant to rotation. To achieve this, we introduce a new component, the Semi-Global Polar Pooling (SGPP) layer, at the end of the critic network. The SGPP layer converts the feature map to polar coordinates and then applies average pooling along the circumferential (angular) direction; because a rotation about the map center becomes a cyclic shift along the angular axis in polar coordinates, this averaging makes the output rotation-invariant. This design lets both networks reflect the geometric structure of the navigation task and learn robust representations that depend less on the training data.
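The three building blocks can be illustrated on a toy single-channel map. This is a minimal NumPy sketch under our own assumptions, not the authors' implementation: `p4_lifting_conv` is only the first (lifting) layer of a p4 G-CNN with one random 3×3 kernel, `blur_pool` uses a [1, 2, 1] binomial filter, `sgpp` samples a polar grid around the map center with nearest-neighbour lookup, and `actor_logits`, `critic_value`, `kernel`, `w_value` and `MAP_SIZE` are hypothetical. The map is square with an odd side so that 90° rotations, the polar grid and stride-2 subsampling all share one center.

```python
import numpy as np

MAP_SIZE = 9  # hypothetical toy map side; odd so rotations, polar grid and stride 2 share a center


def correlate_same(x, k):
    """Zero-padded 'same' 2-D cross-correlation of map x with an odd-sized square kernel k."""
    r = k.shape[0] // 2
    xp = np.pad(x, r)  # zero padding on every side
    out = np.empty(x.shape)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            out[i, j] = np.sum(xp[i:i + k.shape[0], j:j + k.shape[1]] * k)
    return out


def p4_lifting_conv(x, k):
    """Lift a 2-D map to p4: one output plane per 90-degree rotation of the kernel -> (4, H, W).

    Rotating x by 90 degrees rotates every plane and cyclically shifts the orientation axis by one.
    """
    return np.stack([correlate_same(x, np.rot90(k, r)) for r in range(4)])


def blur_pool(x):
    """Anti-aliased downsampling: [1, 2, 1] binomial low-pass filter, then stride-2 subsampling."""
    b = np.array([1.0, 2.0, 1.0])
    return correlate_same(x, np.outer(b, b) / 16.0)[::2, ::2]


def sgpp(feat, n_angles=8):
    """Hypothetical SGPP sketch for a (C, H, W) map with odd H == W.

    Resamples each channel on a polar grid centered on the map (nearest-neighbour lookup), then
    averages along the angular axis, keeping one value per (channel, radius).
    n_angles should be a multiple of 4 so a 90-degree rotation permutes the sampled angles.
    """
    c = feat.shape[-1] // 2
    radii = np.arange(c + 1)
    thetas = 2 * np.pi * np.arange(n_angles) / n_angles
    rows = c + np.rint(radii[:, None] * np.cos(thetas)).astype(int)
    cols = c + np.rint(radii[:, None] * np.sin(thetas)).astype(int)
    polar = feat[:, rows, cols]  # (C, n_radii, n_angles)
    return polar.mean(axis=-1)   # circumferential average -> (C, n_radii)


rng = np.random.default_rng(0)
kernel = rng.standard_normal((3, 3))              # hypothetical "learned" 3x3 filter
w_value = rng.standard_normal(MAP_SIZE // 2 + 1)  # hypothetical linear value head over radii


def actor_logits(m):
    """Goal scores per downsampled map cell: lift, max over orientations, blur-pool."""
    return blur_pool(p4_lifting_conv(m, kernel).max(axis=0))


def critic_value(m):
    """Scalar value: lift, SGPP per orientation plane, average over orientations, linear head."""
    radial = sgpp(p4_lifting_conv(m, kernel)).mean(axis=0)
    return float(radial @ w_value)


m = rng.random((MAP_SIZE, MAP_SIZE))  # toy single-channel "map"
print(np.allclose(actor_logits(np.rot90(m)), np.rot90(actor_logits(m))))  # equivariant actor
print(np.isclose(critic_value(np.rot90(m)), critic_value(m)))            # invariant critic
```

Both checks hold because the lifting layer turns a rotated input into rotated planes with a cyclic shift along the orientation axis: taking the maximum over orientations gives a goal map that rotates with the input, while averaging the SGPP radial profiles over orientations gives a value that does not change. A full network would stack further group-convolution layers, acting on all four orientation planes, before these heads.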

Experimental Results

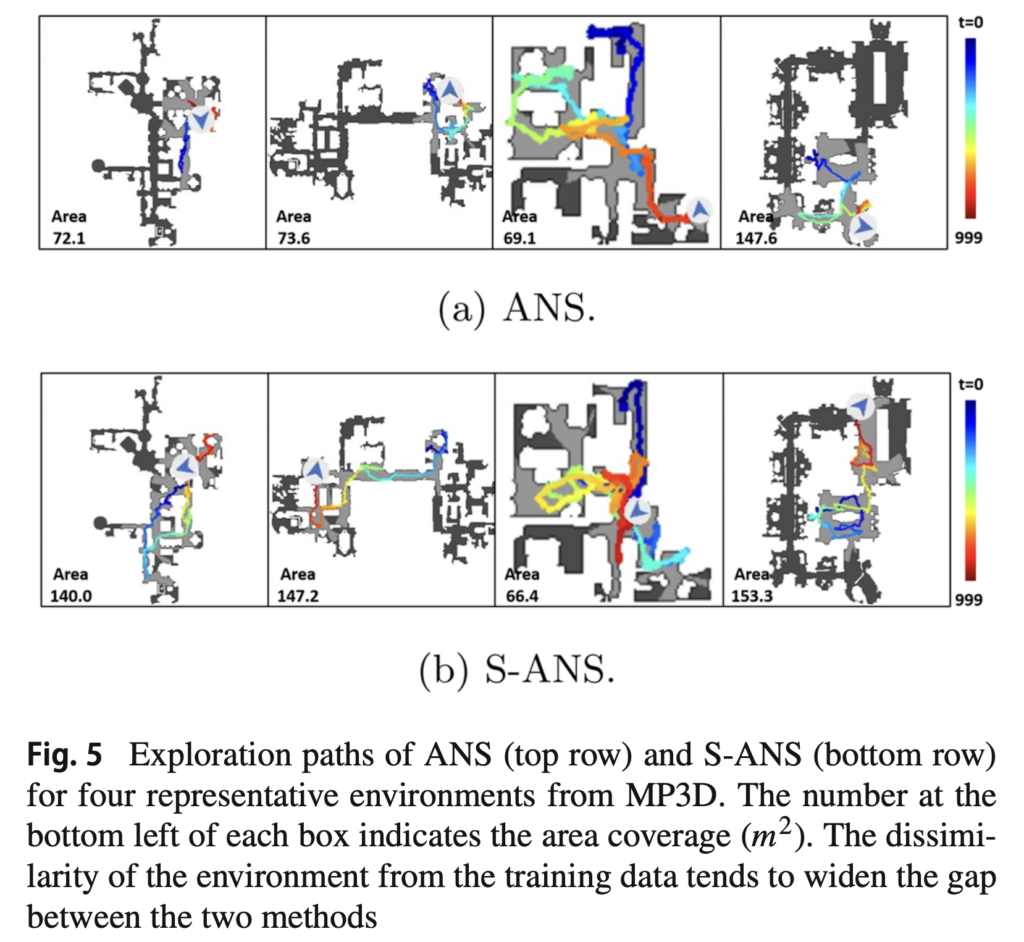

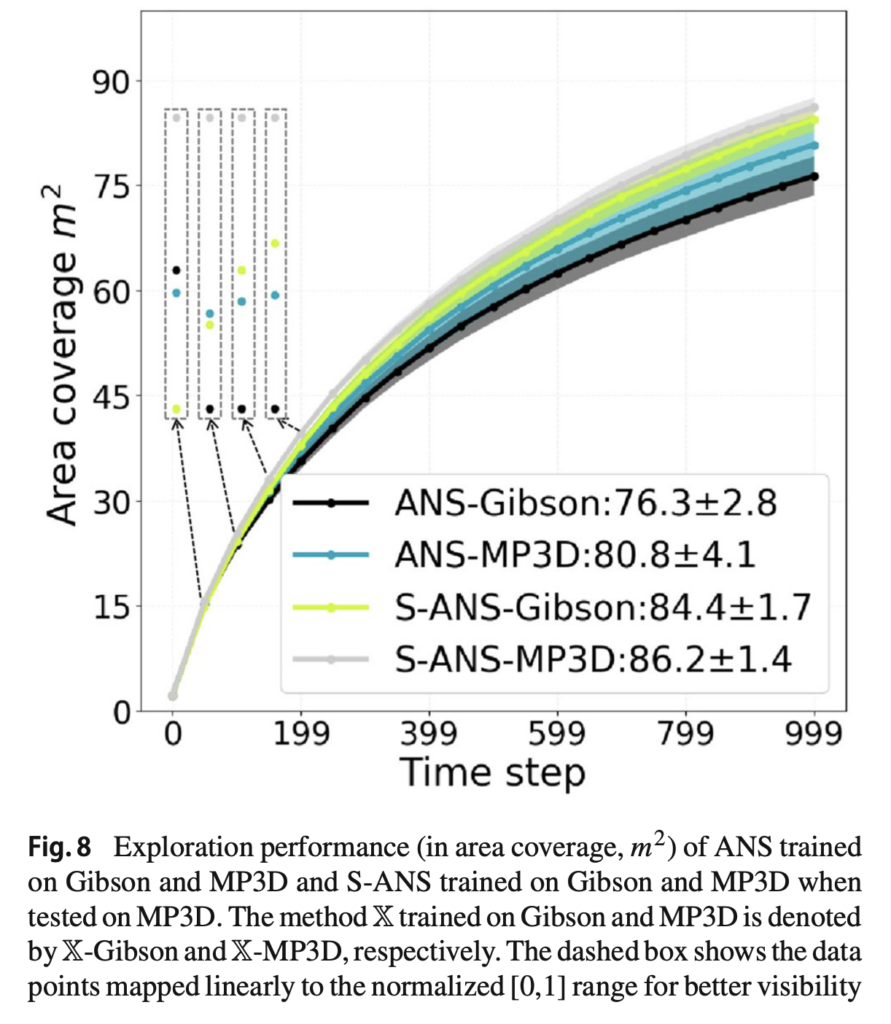

The proposed method is evaluated on visual exploration and object goal navigation in realistic simulated environments from the Gibson and Matterport3D (MP3D) datasets. On both datasets, even when the training and test environments differ, the symmetry-aware version of ANS (S-ANS) covers significantly more area than the conventional ANS, demonstrating markedly better generalization to unseen environments. For object goal navigation, extending SemExp to S-SemExp increases the success rate, improves path efficiency as measured by Success weighted by Path Length (SPL), and reduces the average time needed to reach the target object. Moreover, quantitative measurements of rotation invariance and analyses of internal feature representations show that incorporating G-convolutions and the SGPP layer greatly improves the rotation invariance of the critic’s outputs. Although G-convolutions increase memory consumption, the combined inductive biases yield more robust navigation performance than the conventional baselines.
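SPL, the path-efficiency metric mentioned above, is commonly defined as SPL = (1/N) Σ S_i · l_i / max(p_i, l_i), where S_i is 1 for a successful episode and 0 otherwise, l_i is the shortest-path distance from start to goal, and p_i is the length of the path the agent actually took. The sketch below computes it, together with a hypothetical `rotation_spread` score (the spread of a value function's outputs over the four 90° rotations of a map) as one simple way to quantify rotation invariance. The paper's exact invariance measurement is not described here, so `rotation_spread` is our own assumption.

```python
import numpy as np


def spl(successes, shortest_lengths, path_lengths):
    """Success weighted by Path Length: mean of S_i * l_i / max(p_i, l_i) over episodes."""
    terms = [s * l / max(p, l) for s, l, p in zip(successes, shortest_lengths, path_lengths)]
    return sum(terms) / len(terms)


def rotation_spread(value_fn, m):
    """Hypothetical invariance score: max minus min of value_fn over the four 90-degree rotations.

    0 means the value is unchanged by rotation; larger means less rotation-invariant.
    """
    values = [value_fn(np.rot90(m, k)) for k in range(4)]
    return max(values) - min(values)


# Three toy episodes: a success with a 25% detour, a failure, and a success on the shortest path.
print(spl([1, 0, 1], [10.0, 8.0, 5.0], [12.5, 20.0, 5.0]))  # mean of 0.8, 0.0 and 1.0

grid = np.arange(16.0).reshape(4, 4)
print(rotation_spread(lambda g: g.sum(), grid))  # the sum ignores orientation -> 0.0
print(rotation_spread(lambda g: g[0, 0], grid))  # the top-left cell does not -> 15.0
```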

Conclusion

This study presents a novel neural architecture for embodied visual navigation that addresses generalization challenges by embedding task-specific symmetries (equivariance and invariance) into the network design. By employing p4 G-convolutions, blur pooling, and the newly designed SGPP layer, the actor and critic networks are endowed with the symmetries that robust navigation requires. Experimental results on both visual exploration and object goal navigation demonstrate that our approach significantly outperforms the conventional ANS and SemExp methods, particularly in test environments that differ substantially from the training conditions. By leveraging inductive biases, our method mitigates overfitting in RL and contributes to the development of highly generalizable navigation models, paving the way for future real-world applications, while further improvements in computational efficiency remain future work.