A Graph Network Approach to Fast Bundle Adjustment for Optimized SLAM

- Post by: admin

- 2025-03-27

In Structure-from-Motion (SfM) and visual SLAM (Simultaneous Localization and Mapping), Bundle Adjustment (BA) is a crucial process that jointly optimizes camera poses and the positions of 3D landmarks. In practice, many visual SLAM systems run BA locally over the most recent keyframes and the landmarks they observe in order to maintain accuracy and tracking stability. However, conventional BA relies on iterative optimization techniques such as the Levenberg-Marquardt (LM) method, which are computationally expensive. Local BA therefore becomes an execution-time bottleneck, especially on embedded systems with limited computational resources, where it can prevent real-time operation. When local BA takes too long, new keyframes cannot be inserted in time, which increases the risk of tracking failure.
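To make the cost of an LM iteration concrete, here is a minimal sketch of LM on a deliberately tiny problem: refining one 3D landmark observed by a few fixed cameras. The simplifications are ours, not the paper's: cameras have identity rotation and unit focal length, and the function names are illustrative. Each iteration builds the normal equations H = J^T J and g = J^T r, solves the damped system (H + lam * diag(H)) delta = -g, and adapts lam depending on whether the cost dropped. In a real local BA the same kind of system couples every keyframe pose and landmark in the window, so each iteration needs a much larger sparse solve, which is where the computational load comes from.

```python
import math

def project(X, t):
    """Pinhole projection of point X from a camera centred at t
    (simplifying assumption: identity rotation, unit focal length)."""
    a, b, c = X[0] - t[0], X[1] - t[1], X[2] - t[2]
    return a / c, b / c

def residuals_and_jacobian(X, cams, obs):
    """Stack the 2D reprojection residuals and their 2x3 Jacobians w.r.t. X."""
    r, J = [], []
    for t, (u_obs, v_obs) in zip(cams, obs):
        a, b, c = X[0] - t[0], X[1] - t[1], X[2] - t[2]
        r += [a / c - u_obs, b / c - v_obs]
        J += [[1 / c, 0.0, -a / c**2], [0.0, 1 / c, -b / c**2]]
    return r, J

def cost(X, cams, obs):
    """Half the sum of squared residuals (inf if X is not in front of every camera)."""
    if any(X[2] - t[2] <= 1e-9 for t in cams):
        return math.inf
    r, _ = residuals_and_jacobian(X, cams, obs)
    return 0.5 * sum(e * e for e in r)

def solve3(A, b):
    """Solve a 3x3 system A x = b by Gaussian elimination with partial pivoting."""
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for k in range(3):
        p = max(range(k, 3), key=lambda i: abs(M[i][k]))
        M[k], M[p] = M[p], M[k]
        for i in range(k + 1, 3):
            f = M[i][k] / M[k][k]
            for j in range(k, 4):
                M[i][j] -= f * M[k][j]
    x = [0.0] * 3
    for i in reversed(range(3)):
        x[i] = (M[i][3] - sum(M[i][j] * x[j] for j in range(i + 1, 3))) / M[i][i]
    return x

def levenberg_marquardt(X, cams, obs, lam=1e-3, iters=50):
    X = list(X)
    for _ in range(iters):
        r, J = residuals_and_jacobian(X, cams, obs)
        # Normal equations: H = J^T J (Gauss-Newton Hessian), g = J^T r (gradient).
        H = [[sum(row[i] * row[j] for row in J) for j in range(3)] for i in range(3)]
        g = [sum(row[i] * e for row, e in zip(J, r)) for i in range(3)]
        # Damped system: (H + lam * diag(H)) delta = -g.
        A = [[H[i][j] + (lam * H[i][i] if i == j else 0.0) for j in range(3)] for i in range(3)]
        delta = solve3(A, [-gi for gi in g])
        X_new = [x + d for x, d in zip(X, delta)]
        if cost(X_new, cams, obs) < cost(X, cams, obs):
            X, lam = X_new, lam * 0.1  # accept: behave more like Gauss-Newton
        else:
            lam *= 10.0                # reject: behave more like gradient descent
        if max(abs(d) for d in delta) < 1e-12:
            break
    return X

# Toy usage: four cameras observe one landmark; start from a poor initial guess.
cams = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (-1.0, 0.5, 0.0)]
X_true = (0.5, -0.2, 5.0)
obs = [project(X_true, t) for t in cams]
X_est = levenberg_marquardt((0.0, 0.0, 3.0), cams, obs)
print(X_est)
```

Note the accept/reject loop: every rejected step costs a full residual evaluation and linear solve without making progress, so the number of iterations (and hence the run time) is data dependent.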

Proposed Method

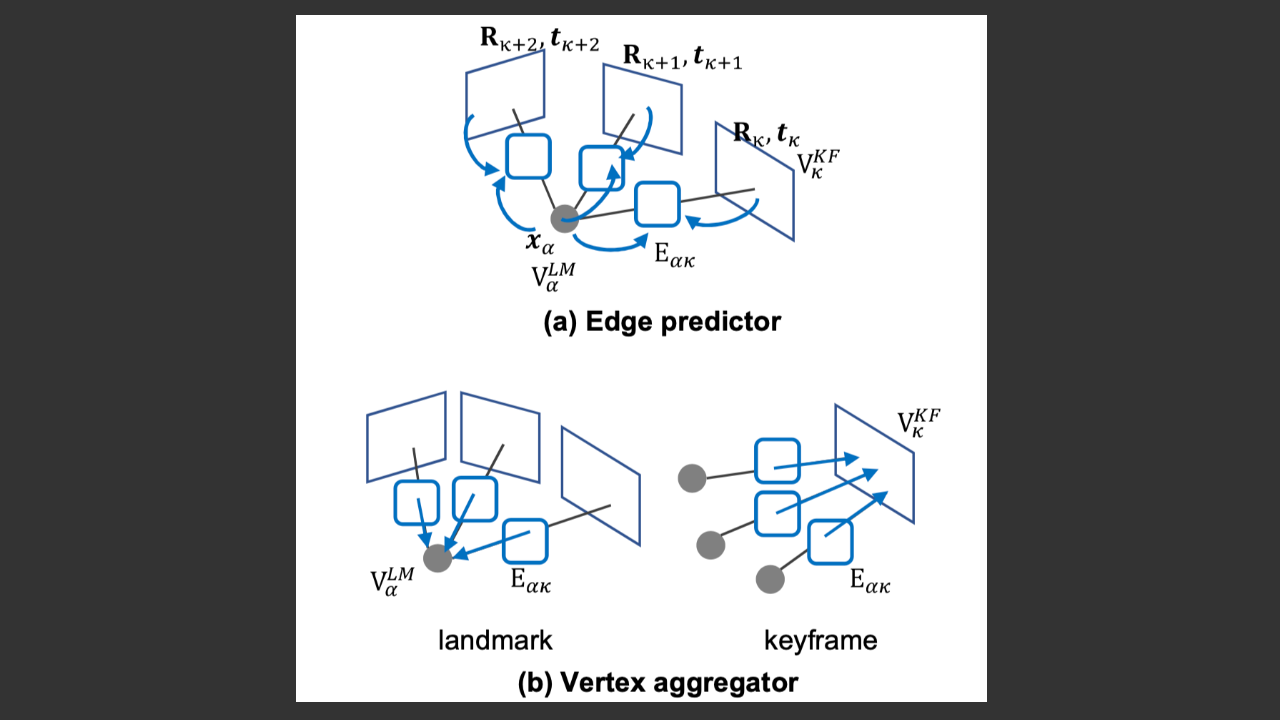

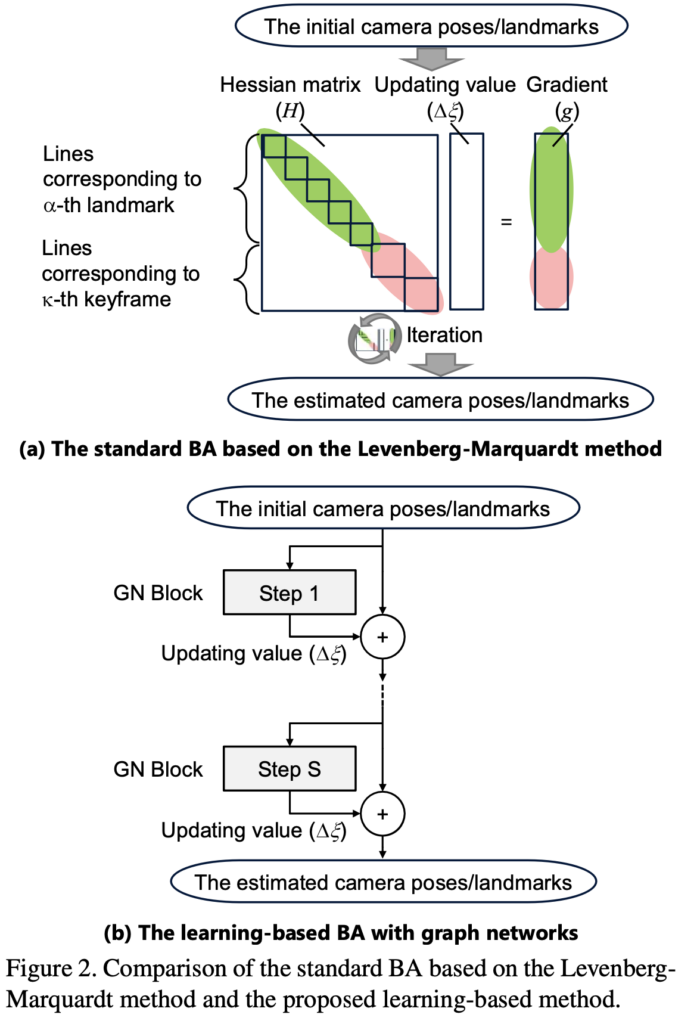

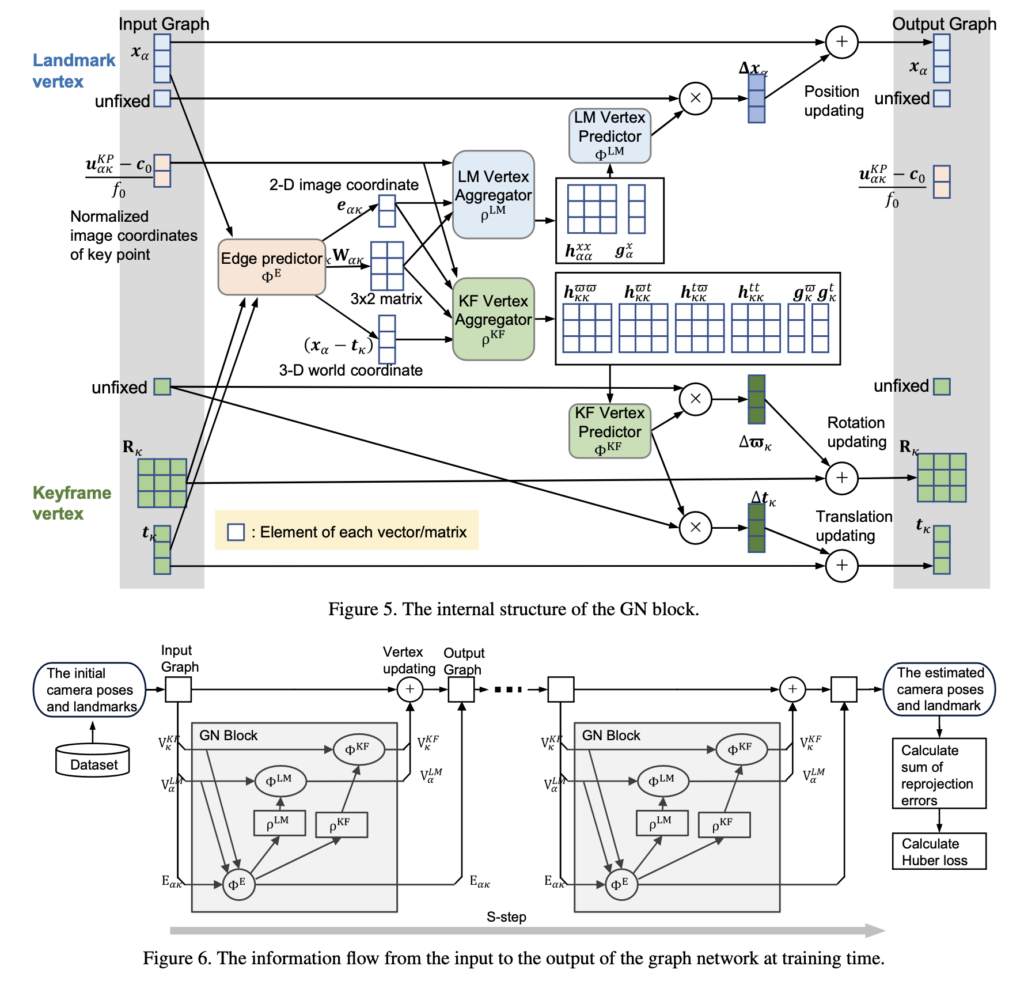

In this study, we propose replacing conventional optimization with a learning-based BA method built on a graph network. Keyframes (camera poses) and landmarks (3D points) are treated as nodes, and the visibility relationships between them are represented as edges, forming a graph. The graph network takes this graph as input and directly predicts parameter updates from an intermediate representation inspired by the block-diagonal elements of the normal equations and the gradient information used in the LM method. At each update step a GN (Graph Network) block is applied, and after a fixed number of iterations the final parameter estimates are output. As in conventional BA, the network is trained by minimizing the sum of reprojection errors under the Huber loss; the training data consist of pairs of initial parameters and their optimized values.
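The graph construction and the intermediate representation can be sketched as follows. This is a minimal, hedged illustration, not the authors' implementation: keyframes are reduced to camera centres with identity rotation and unit focal length, and all names (`BAGraph`, `node_features`, `placeholder_gn_block`, `unrolled_ba`) are hypothetical. Each node's features are its 3x3 diagonal block of the Huber-weighted normal equations and its gradient, accumulated over its incident edges, in the spirit of the block-diagonal and gradient inputs described above. The trained GN block is replaced by a hand-written damped block-diagonal step so that the fixed-iteration loop runs end to end; in the paper, a learned network predicts the update instead.

```python
import math
from dataclasses import dataclass, field

@dataclass
class BAGraph:
    """Bipartite BA graph. Simplification (ours): a keyframe node stores only a camera
    centre t (identity rotation, unit focal length) instead of a full 6-DoF pose."""
    keyframes: list                            # [[tx, ty, tz], ...]
    landmarks: list                            # [[X, Y, Z], ...]
    edges: list = field(default_factory=list)  # visibility: (kf_index, lm_index, (u, v))

def project(t, X):
    a, b, c = X[0] - t[0], X[1] - t[1], X[2] - t[2]
    return a / c, b / c

def edge_terms(g, kf, lm, uv):
    """Residual of one edge and its Jacobian w.r.t. the landmark.
    The residual depends on X - t, so the Jacobian w.r.t. the keyframe is -J."""
    t, X = g.keyframes[kf], g.landmarks[lm]
    a, b, c = X[0] - t[0], X[1] - t[1], X[2] - t[2]
    r = [a / c - uv[0], b / c - uv[1]]
    J = [[1 / c, 0.0, -a / c**2], [0.0, 1 / c, -b / c**2]]
    return r, J

def huber(s, delta):
    return 0.5 * s * s if s <= delta else delta * (s - 0.5 * delta)

def total_cost(g, delta=1.0):
    """Huber penalty of each edge's reprojection error, summed over all edges."""
    return sum(huber(math.hypot(*edge_terms(g, kf, lm, uv)[0]), delta) for kf, lm, uv in g.edges)

def node_features(g, delta=1.0):
    """Per-node intermediate representation: the node's 3x3 diagonal block of the
    Huber (IRLS) weighted normal equations and its gradient, summed over incident edges."""
    def empty():
        return [[0.0] * 3 for _ in range(3)], [0.0] * 3
    kf_feat = [empty() for _ in g.keyframes]
    lm_feat = [empty() for _ in g.landmarks]
    for kf, lm, uv in g.edges:
        r, J = edge_terms(g, kf, lm, uv)
        s = math.hypot(*r)
        w = 1.0 if s <= delta else delta / s  # Huber IRLS weight
        for (H, grad), sign in ((lm_feat[lm], 1.0), (kf_feat[kf], -1.0)):
            for i in range(3):
                grad[i] += sign * w * (J[0][i] * r[0] + J[1][i] * r[1])
                for j in range(3):  # sign**2 == 1, so the block has the same form for both
                    H[i][j] += w * (J[0][i] * J[0][j] + J[1][i] * J[1][j])
    return kf_feat, lm_feat

def solve3(A, b):
    """Solve a 3x3 linear system with Cramer's rule."""
    def det(M):
        return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
                - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
                + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))
    D = det(A)
    return [det([[b[r] if c == i else A[r][c] for c in range(3)] for r in range(3)]) / D
            for i in range(3)]

def placeholder_gn_block(H, grad, lam=1.0):
    """Hypothetical stand-in for the learned GN block (NOT the paper's network):
    a damped block-diagonal step -(H + lam * diag(H))^-1 grad."""
    A = [[H[i][j] + (lam * H[i][i] if i == j else 0.0) for j in range(3)] for i in range(3)]
    return solve3(A, [-x for x in grad])

def unrolled_ba(g, num_steps=5, delta=1.0, update=placeholder_gn_block):
    """Apply a fixed number of update steps (no line search, no convergence test)."""
    for _ in range(num_steps):
        kf_feat, lm_feat = node_features(g, delta)  # all features from the current state
        for nodes, feats in ((g.keyframes, kf_feat), (g.landmarks, lm_feat)):
            for p, (H, grad) in zip(nodes, feats):
                step = update(H, grad)
                for i in range(3):
                    p[i] += step[i]
    return g

# Toy usage: 3 keyframes, 4 landmarks, every landmark visible from every keyframe.
kfs = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
lms = [[0.5, -0.2, 5.0], [-1.0, 0.8, 6.0], [1.5, 1.0, 4.0], [0.0, 0.0, 7.0]]
edges = [(k, l, project(kfs[k], lms[l])) for k in range(3) for l in range(4)]
noisy = [[x + 0.05 * (-1) ** (i + j) for j, x in enumerate(X)] for i, X in enumerate(lms)]
g = BAGraph([t[:] for t in kfs], noisy, edges)
before = total_cost(g)
unrolled_ba(g, num_steps=5)
after = total_cost(g)
print(before, after)
```

Because each residual couples exactly one keyframe and one landmark, the off-diagonal blocks this representation drops are precisely the keyframe-landmark couplings. A block-diagonal step alone ignores them; a graph network can, in principle, account for them by passing messages along the visibility edges.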

Experimental Results

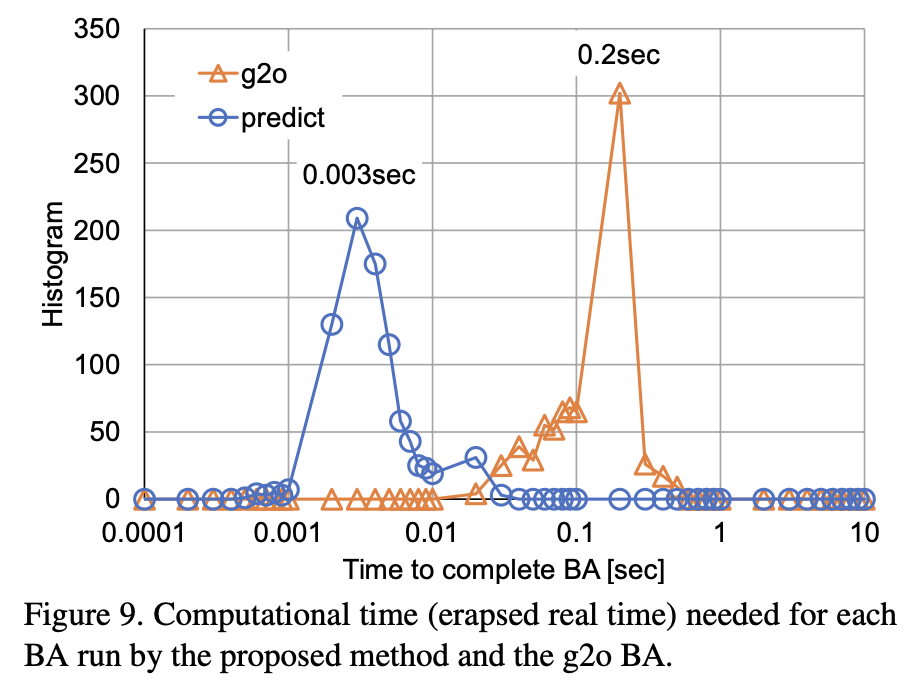

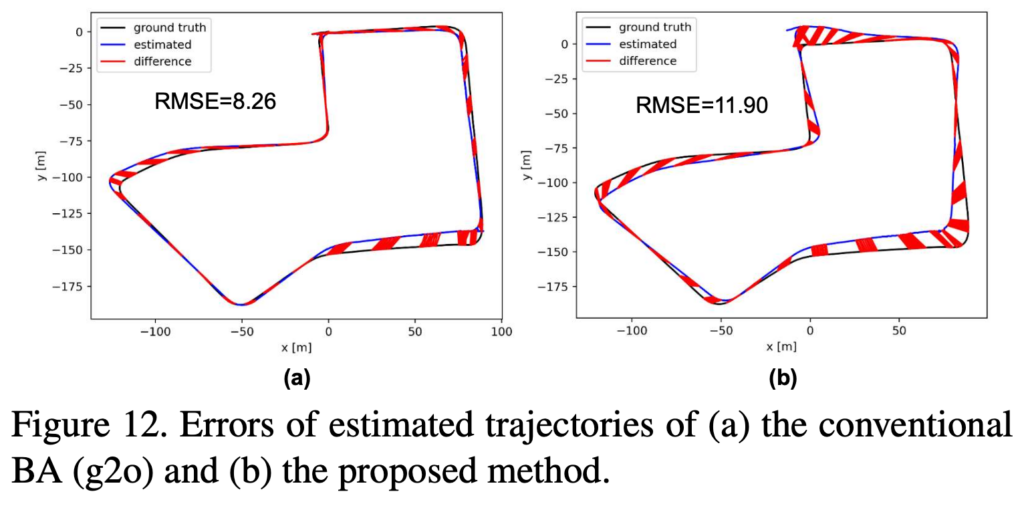

Experiments were conducted primarily on the KITTI dataset, comparing the proposed method with conventional optimization-based BA implemented with g2o. Although the proposed method yielded slightly higher reprojection error than the conventional method on individual local BA runs, it reduced computation time dramatically, to approximately 1/60–1/10 of that of the conventional approach. Furthermore, when g2o BA was replaced by the proposed method within the full SLAM pipeline, the faster local BA allowed keyframes to be generated more frequently, which improved the overall tracking robustness of the system. This improvement is expected to help make SLAM practical even in environments with limited computational resources.

Conclusion

This paper proposes an approach that replaces conventional Levenberg-Marquardt-based BA with a learning-based method built on a graph network. The network predicts parameter updates from an intermediate representation (the block-diagonal Hessian elements and gradient information from the LM method) defined on a bipartite graph of keyframes and landmarks, which significantly accelerates the iterative optimization process. Experimental results demonstrate that the proposed method dramatically reduces computation time compared with conventional g2o BA, improving the real-time performance and robustness of the overall SLAM system at the cost of only a slight decrease in accuracy. Future work may further balance accuracy and speed through improvements to the network architecture and the intermediate representation, paving the way for deployment in real-world embedded systems.

Publication

Tanaka, Tetsuya, Yukihiro Sasagawa, and Takayuki Okatani. “Learning to bundle-adjust: A graph network approach to faster optimization of bundle adjustment for vehicular SLAM.” Proceedings of the IEEE/CVF International Conference on Computer Vision. 2021.

@inproceedings{tanaka2021learning,
  title={Learning to bundle-adjust: A graph network approach to faster optimization of bundle adjustment for vehicular SLAM},
  author={Tanaka, Tetsuya and Sasagawa, Yukihiro and Okatani, Takayuki},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={6250--6259},
  year={2021}
}